About SaltStack

The world of configuration management software is well populated these days. You may already have looked at Puppet, Chef or Ansible, but that’s not all of it: today we focus on SaltStack. Simplicity is at its core, without compromising on speed or scalability; some users run 10,000 minions or more. Salt remote execution is built on top of an event bus, which sets Salt apart from the other tools.

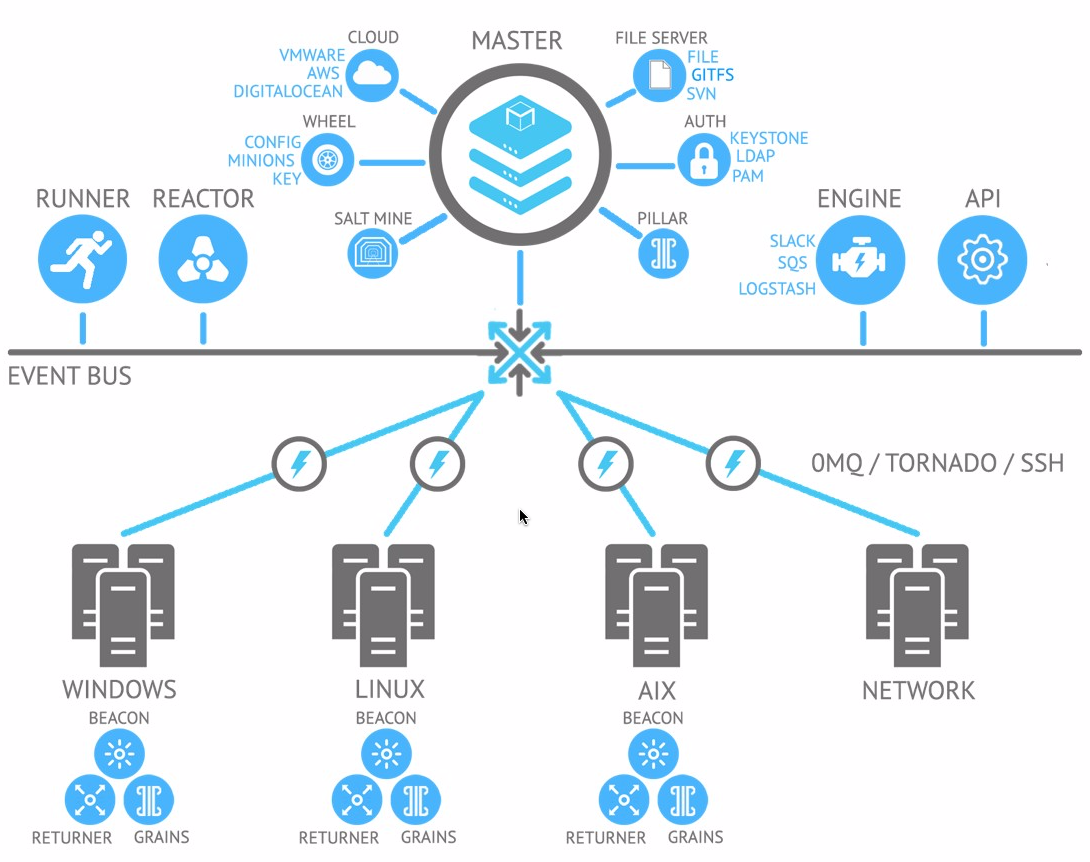

Architecture

Salt uses a server-agent communication model: the server is called the salt master and the agents are called salt minions.

Salt minions receive commands simultaneously from the master and contain everything required to execute them locally and report back to the Salt master. Communication between master and minions happens over a high-performance data pipe which uses ZeroMQ or raw TCP; messages are serialized using MessagePack to keep network traffic fast and light. Salt uses public keys for authentication with the master daemon, then uses faster AES encryption for payload communication.

States are described in YAML and remote execution is available from a CLI, so programming or extending Salt isn’t a must.

Salt is heavily pluggable: each function can be replaced by a plugin, implemented as a Python module, to change for example the data store, the file server, the authentication mechanism or the state representation. So when I said state representation uses YAML, I was talking about the Salt default, which can be replaced by JSON, Jinja, Wempy, Mako, or Py Objects. But don’t freak out, Salt comes with default options for all these things, which allow you to jumpstart the system and customize it when the need arises.
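To give an idea of how small such a plugin can be, here is a minimal sketch of a custom execution module. The module name hello, the function greet and the file location are hypothetical; the sketch assumes the usual convention that modules placed in a _modules directory under your file root are pushed to minions with saltutil.sync_modules.

```python
# Hypothetical custom execution module, saved for example as
# /srv/salt/_modules/hello.py. Public functions of the module are then
# exposed as hello.<function>.


def greet(name="world"):
    """Return a greeting; illustrative only, not shipped with Salt."""
    return "Hello, {0}!".format(name)


if __name__ == "__main__":
    # Plain Python call, handy to check the function before syncing it.
    print(greet("salt"))
```

Once synced, it could be called like any other function, for example with salt '*' hello.greet salt.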

Terminology

At first, you can be overwhelmed by the obscure vocabulary that Salt introduces; here are the main Salt concepts.

- salt master - sends cmds to minions

- salt minions - receives cmds from master

- execution modules - ad hoc cmds

- formulas (states) - representation of a system configuration: a grouping of one or more state files, possibly with pillar data, configuration files or anything else needed to define a neat package for a particular application.

- grains - static information about minions

- pillar - secure user-defined variables stored on master and assigned to minions (equivalent to data bags in Chef or Hiera in Puppet)

- mine - area on the master where results from commands executed on minions can be stored, like the IP address of a backend webserver, used later to configure a load balancer

- top file - matches formulas and pillar data to minions

- runners - modules executed on master

- returners - inject minion data to another system

- renderers - components that run the templates to produce valid state or configuration files; the default one uses Jinja2 syntax and outputs YAML.

- reactor - trigger reaction on events

- thorium - a new kind of reactor, still experimental.

- beacons - little pieces of code on the minion that listen for things like server failures or file changes and inform the master. Combined with the reactor, they can be used for self-healing

- proxy minions - translate Salt language into device-specific instructions to bring a device to the desired state using its API or over SSH.

- salt cloud - bootstrap cloud nodes

- salt ssh - run cmds on systems without minions

You’ll find a great overview of all of this on the official docs.

Installation

Salt is built on top of lots of Python modules: Msgpack, YAML, Jinja2, MarkupSafe, ZeroMQ, Tornado, PyCrypto and M2Crypto are all required. To keep your system clean and easily upgradable, and to avoid conflicts, the easiest installation workflow uses system packages.

I’ll be using Ubuntu 16.04 [Xenial Xerus], for other Operating Systems consult the salt repo page.

Start by fetching the official SaltStack GPG key

wget -O - https://repo.saltstack.com/apt/ubuntu/14.04/amd64/latest/SALTSTACK-GPG-KEY.pub | sudo apt-key add -

Add the official saltstack repository by adding the below line into /etc/apt/sources.list.d/saltstack.list

deb http://repo.saltstack.com/apt/ubuntu/16.04/amd64/latest xenial main

Update the package management database

apt-get update

Install your Salt master and the Salt Minion

apt-get install salt-master salt-minion

Finish the installation by creating a directory where you’ll store your state files.

mkdir -p /srv/salt

You should now have Salt installed on your system, check to see if everything looks good

salt --version

alternative installations

If you can’t find packages for your distribution, you can rely on Salt Bootstrap which is an alternative installation method, look below for further details:

https://github.com/saltstack/salt-bootstrap

At the end of this post, you’ll find another way to install salt using salt itself ;)

Configuration

If you have firewalls in the way, make sure you open up both port 4505 (publish port) and 4506 (return port) to the Salt master to let the minions talk to it.

Configure your Minion to connect to your master

vi /etc/salt/minion.d/minion.conf

Change the following lines as indicated below

master: localhost

id: saltstack-m01

master indicates which master this minion should connect to

id identifies the minion; if you don’t specify it in your config file, the minion tries to guess it, and in most cases it will be the FQDN of your system.

Before playing around, restart the required Salt services

service salt-minion restart

service salt-master restart

Make sure services are also started at boot time

systemctl enable salt-master.service

systemctl enable salt-minion.service

The master needs to trust the minions before anything can be done on them, so you have to accept the key of each of your minions as follows

salt-key

Accepted Keys:

Denied Keys:

Unaccepted Keys:

saltstack-m01

Rejected Keys:

Before accepting it, you can validate it looks good, first inspect it

salt-key -f saltstack-m01

Unaccepted Keys:

saltstack-m01: 98:f2:e1:9f:b2:b6:0e:fe:cb:70:cd:96:b0:37:51:d0

Compare it with the minion one

salt-call --local key.finger

local:

98:f2:e1:9f:b2:b6:0e:fe:cb:70:cd:96:b0:37:51:d0

It looks the same, so you can accept it

salt-key -a saltstack-m01
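The fingerprints compared above are colon-separated hex digests, and their 16 bytes match the length of an MD5 digest. As a rough sketch of how such a string is formatted (the helper name fingerprint is mine, and exactly which bytes of the key Salt feeds to the hash is not reproduced here):

```python
import hashlib


def fingerprint(key_bytes, hash_name="md5"):
    """Format a digest of key_bytes as colon-separated hex pairs."""
    digest = hashlib.new(hash_name, key_bytes).hexdigest()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))


if __name__ == "__main__":
    # Any byte string works for the demonstration; a real key would be
    # read from its file instead.
    print(fingerprint(b"example key material"))
```

Two fingerprints computed this way are equal only if the hashed bytes are equal, which is why comparing the master and minion values is a meaningful check before accepting a key.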

Repeat the above process of installing salt-minion and accepting its key to add new minions to your environment. Consult the documentation for more details on minion configuration, or more generally this documentation for all Salt configuration options.

Salt commands

- salt-master - daemon used to control the Salt minions

- salt-minion - daemon which receives commands from a Salt master.

- salt-key - management of Salt server public keys used for authentication.

- salt - main CLI to execute commands across minions in parallel and query them too.

- salt-ssh - controls minions using SSH for transport

- salt-run - execute a salt runner

- salt-call - runs module.function locally on a minion, use --local if you don’t want to contact your master

- salt-cloud - VM provisioning in the cloud

- salt-api - daemons which offer an API to interact with Salt

- salt-cp - copy a file to a set of systems

- salt-syndic - daemon running on a minion that passes through commands from a higher master

- salt-proxy - Receives commands from a master and relay these commands to devices that are unable to run a full minion.

- spm - frontend command for managing salt packages.

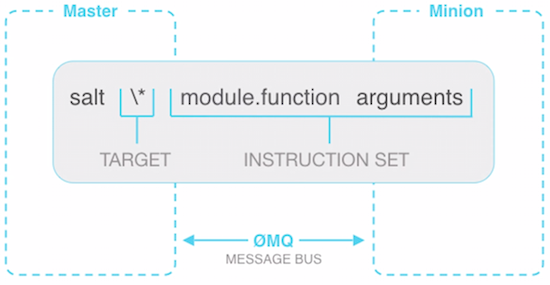

Remote execution

The first obvious thing we can do with our master/minion infrastructure is to run commands remotely, for example

salt '*' test.ping

saltstack-m01:

True

It confirms your saltstack-m01 minion is alive: it responded True, as expected from our test.ping function.

embedded documentation

To get more insight about this function, refer to its documentation

salt '*' sys.doc test.ping

test.ping:

Used to make sure the minion is up and responding. Not an ICMP ping.

Returns ``True``.

CLI Example:

salt '*' test.ping

The test module contains other functions, to list all of them

salt --verbose '*' sys.list_functions test

command structure

As you may have guessed by now, a salt command is composed of

command-line options --verbose, see below for more

target which minion to target

module.function which function to run on target, for example sys.list_functions

arguments which argument to pass to the function, we passed test in our example above
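If you drive the CLI from a script, the same four parts map naturally onto an argument list. This is only a sketch: build_salt_command is a hypothetical helper of mine, not something shipped with Salt, and it only assembles the command without running it.

```python
import shlex


def build_salt_command(target, function, args=(), options=()):
    """Assemble a salt CLI call: options, target, module.function, arguments."""
    return ["salt", *options, target, function, *args]


cmd = build_salt_command(
    "*", "sys.list_functions", args=["test"], options=["--verbose"]
)

if __name__ == "__main__":
    # shlex.join quotes the target so the shell does not expand the glob.
    print(shlex.join(cmd))
```

The resulting list can be handed to subprocess.run on the master; keeping it as a list avoids quoting problems with targets such as '*'.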

Command line options

Like most Unix commands, Salt comes with lots of options

--help see available command-line options

--verbose or -v turns on verbosity

-t TIMEOUT change timeout of the running command

--async runs without waiting for a response

--show-timeout shows which minions timed out

remote execution tips & tricks

modules, state functions

salt '*' sys.list_modules # List all the preloaded Salt modules

salt '*' sys.list_functions # List all the functions

salt '*' sys.list_state_modules # List all the state modules

salt '*' sys.list_state_functions # List all the state functions

network

salt '*' network.ip_addrs # Get IP of your minion

salt '*' network.ping <hostname> # Ping a host from your minion

salt '*' network.traceroute <host> # Traceroute a host from your minion

salt '*' network.get_hostname # Get hostname

salt '*' network.mod_hostname # Modify hostname

more examples in the documentation

minion status

salt-run manage.status # What is the status of all my minions? (both up and down)

salt-run manage.up # Any minions that are up?

salt-run manage.down # Any minions that are down?

jobs

salt-run jobs.active # get list of active jobs

salt-run jobs.list_jobs # get list of historic jobs

salt-run jobs.lookup_jid <job_id> # get details of this specific job

system

salt 'minion*' system.reboot # Let's reboot all the minions that match minion*

salt '*' status.uptime # Get the uptime of all our minions

salt '*' status.diskusage

salt '*' status.loadavg

salt '*' status.meminfo

packages

salt '*' pkg.list_upgrades # get a list of packages that need to be upgraded

salt '*' pkg.upgrade # Upgrades all packages via apt-get dist-upgrade (or similar)

salt '*' pkg.version htop # get current version of the htop package

salt '*' pkg.install htop # install or upgrade the htop package

salt '*' pkg.remove htop

services

salt '*' service.status <service name>

salt '*' service.available <service name>

salt '*' service.stop <service name>

salt '*' service.start <service name>

salt '*' service.restart <service name>

salt '*' ps.grep <service name>

commands

salt '*' cmd.run 'echo really Happy!'

salt '*' cmd.run_all 'echo really Happy!'

Matching

Salt offers many ways to target specific minions in your environment, let’s review all of them

glob matching

It’s what we’ve been using so far, it’s similar to the glob matching of your unix shell

salt 'server-??' test.ping

salt 'server-0[1-9]' test.ping
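To predict which minion IDs a glob will hit before running anything, Python’s fnmatch module follows the same shell-style rules. The minion IDs below are made up for the example, and I’m assuming Salt’s glob matching behaves like fnmatch here.

```python
from fnmatch import fnmatchcase

# Hypothetical minion IDs, for illustration only.
minion_ids = ["server-01", "server-05", "server-10", "server-100", "db-01"]


def glob_targets(pattern, ids):
    """Return the IDs matched by a shell-style glob pattern."""
    return [minion_id for minion_id in ids if fnmatchcase(minion_id, pattern)]


if __name__ == "__main__":
    print(glob_targets("server-??", minion_ids))
    print(glob_targets("server-0[1-9]", minion_ids))
```

Note that ? matches exactly one character, so 'server-??' leaves out server-100.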

Perl Regular expression matching

Perl is famous for its regular expressions, and Salt can use this powerful syntax to match minion IDs

salt -E 'server' test.ping

salt -E 'server-.*' test.ping

salt -E '^server-01$' test.ping

salt -E 'server-((01)|(02))' test.ping
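The difference between anchored and unanchored expressions is easy to miss. The sketch below assumes that -E applies the expression from the start of the minion ID, the way Python’s re.match does; the minion IDs are hypothetical.

```python
import re

# Hypothetical minion IDs, for illustration only.
minion_ids = ["server-01", "server-02", "server-011", "web-server-01"]


def regex_targets(expression, ids):
    """Return the IDs whose beginning matches the regular expression."""
    return [minion_id for minion_id in ids if re.match(expression, minion_id)]


if __name__ == "__main__":
    for expression in ("server", "^server-01$", "server-((01)|(02))"):
        print(expression, regex_targets(expression, minion_ids))
```

Under that assumption, 'server-((01)|(02))' also matches server-011 because nothing anchors the end; add a trailing $ when you want an exact match.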

List matching

Sometimes you want to restrict remote execution to a known list of servers

salt -L 'server-01,server-02,server-03' test.ping

Grain and Pillar matching

Grains describe minion characteristics like operating system, release number, cpu_model, kernel, etc. You can target nodes based on them

salt -G 'os:Ubuntu' test.ping

to list all the grains available for minions

salt '*' grains.items

To get the value of a grain

salt '*' grains.get osfullname

You can add your own

salt '*' grains.setval web frontend

salt '*' grains.delval web

Pillar data is similar but stored on the master. With Pillar you can likewise run

salt '*' pillar.items

salt '*' pillar.get hostname

To target minions using Pillar

salt -I 'branch:mas*' test.ping

IP Addresses

Use -S to match against IP Addresses (IPv4 only for now)

salt -S 192.168.40.20 test.ping

salt -S 10.0.0.0/24 test.ping

In state or pillar files matching looks like

'192.168.1.0/24':
  - match: ipcidr
  - internal
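To check by hand whether a minion address falls inside a target, the standard ipaddress module does the arithmetic. This is an illustration of the matching idea only, not Salt’s own code, and ip_matches is a name I made up.

```python
import ipaddress


def ip_matches(target, minion_ips):
    """True if any minion address equals the target IP or lies in its subnet."""
    # A bare address is treated as a single-host network.
    network = ipaddress.ip_network(target, strict=False)
    return any(ipaddress.ip_address(ip) in network for ip in minion_ips)


if __name__ == "__main__":
    print(ip_matches("192.168.40.20", ["192.168.40.20"]))
    print(ip_matches("10.0.0.0/24", ["10.0.1.17"]))
```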

Compound

We’ve kept the most powerful matching capability for the end, it combines all of the above

salt -C 'server-* and G@os:Ubuntu and not L@server-02' test.ping

The letters for the different matching methods are

G Grains glob

E Perl regexp on minion ID

P Perl regexp on Grains

L List of Minion

I Pillar glob

S Subnet/IP address

R Range cluster
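To make the compound syntax less magical, here is a toy evaluator for the expression used above. It is a sketch under strong assumptions: it only knows bare globs, G@ and L@, it describes a minion as a plain dict, and the function names are mine rather than Salt’s.

```python
from fnmatch import fnmatchcase


def match_word(word, minion):
    """Evaluate one compound term against a minion described as a dict."""
    if word.startswith("G@"):
        key, _, pattern = word[2:].partition(":")
        return fnmatchcase(str(minion["grains"].get(key, "")), pattern)
    if word.startswith("L@"):
        return minion["id"] in word[2:].split(",")
    # No prefix: glob on the minion ID.
    return fnmatchcase(minion["id"], word)


def compound_match(expression, minion):
    """Replace every term by True/False, then evaluate the boolean expression."""
    parts = []
    for word in expression.split():
        if word in ("and", "or", "not"):
            parts.append(word)
        else:
            parts.append(str(match_word(word, minion)))
    # parts now only holds 'True', 'False', 'and', 'or' and 'not'.
    return eval(" ".join(parts))


if __name__ == "__main__":
    minion = {"id": "server-01", "grains": {"os": "Ubuntu"}}
    print(compound_match("server-* and G@os:Ubuntu and not L@server-02", minion))
```

Each term is resolved on its own and the and/or/not operators simply combine the results, which is why the order and prefixes of the terms matter more than their number.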

Nodegroups

If you have a set of nodes that you target often and don’t want to repeat yourself, you can declare a nodegroup in your /etc/salt/master configuration file using a compound statement

nodegroups:
  group1: 'L@saltstack-m01,saltstack-m02 or admin*.yet.org'
  group2: 'G@os:Debian and yet.org'
  group3: 'G@os:Debian and N@group1'
  group4:
    - 'G@foo:bar'
    - 'or'
    - 'G@foo:baz'

Your master then needs to be restarted.

To then match a nodegroup on the CLI

salt -N group1 test.ping

Batch size

If you want to do a rolling upgrade, you can use

salt -G 'os:Debian' --batch-size 25% apache.signal restart

The batch size can be a percentage, as above, or an absolute number

--batch-size number (or percentage) of minions to work on at once

--batch-wait amount of time to wait before working on the next batch
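To get a feel for what a given batch size means on your fleet, the sketch below turns a batch spec into groups of minions. It is a simplification: the rounding rule for percentages is my assumption, and I use fixed chunks to keep the arithmetic visible, which may not be how Salt actually schedules a batch.

```python
def batch_size(spec, minion_count):
    """Turn '25%' or '3' into a number of minions (at least one)."""
    spec = str(spec).strip()
    if spec.endswith("%"):
        # Assumption: percentages are rounded down.
        size = int(minion_count * float(spec[:-1]) / 100)
    else:
        size = int(spec)
    return max(1, min(size, minion_count))


def batches(minions, spec):
    """Split the minion list into consecutive groups of the batch size."""
    size = batch_size(spec, len(minions))
    return [minions[i:i + size] for i in range(0, len(minions), size)]


if __name__ == "__main__":
    fleet = ["web-{0:02d}".format(n) for n in range(1, 9)]
    for group in batches(fleet, "25%"):
        print(group)
```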

Curious about targeting ?

Find more details on targeting in the official documentation

Automate your infrastructure

Built on top of remote execution, Salt offers powerful configuration management capabilities. So far we’ve been using execution modules, which are imperative; for configuration management we’ll transition to state modules, which are declarative and idempotent.

To list the functions of a given state module

salt '*' sys.list_state_functions pkg

To get the documentation for any of them

salt '*' sys.state_doc pkg.latest

To illustrate how state management works, let’s create a state file (sls) at /srv/salt/tools.sls which will install some useful packages on our system

tools:
  pkg.latest:
    - pkgs:
      - mtr
      - iftop
      - vnstat
      - htop
      - iotop
      - curl
      - mosh
      - byobu
      - vim
      - logwatch
      - unattended-upgrades
      - fail2ban

To apply the above state

salt '*' state.sls tools

Applying states one by one to each minion would not be very efficient, so let me introduce top.sls files, which use targeting to assign states to minions. The structure is pretty simple: it starts with the environment name (base by default), followed by targets and, for each target, state file names without their extension.

base:
  '*':
    - tools
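Conceptually, the top file is a lookup table from targets to state names. The sketch below resolves it for a single minion using glob targets only; the 'web-*' entry and the nginx state are hypothetical additions to the example above, and states_for is my own helper, not a Salt function.

```python
from fnmatch import fnmatchcase

# The top file above as a Python dict, plus one hypothetical extra target.
top = {"base": {"*": ["tools"], "web-*": ["nginx"]}}


def states_for(minion_id, top_data, saltenv="base"):
    """Collect the states of every glob target that matches the minion ID."""
    states = []
    for target, sls_names in top_data.get(saltenv, {}).items():
        if fnmatchcase(minion_id, target):
            states.extend(name for name in sls_names if name not in states)
    return states


if __name__ == "__main__":
    print(states_for("saltstack-m01", top))
    print(states_for("web-01", top))
```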

To apply all states configured in your top.sls file just run

salt '*' state.apply

For a dry-run

salt '*' state.apply test=True

List of available state modules

Pillar

Not all minions should look the same, so Pillar was invented to attach key/value pairs to them and dynamically change their state based on their profile.

To use Pillar, you first need a directory to store the data, so create the Pillar root directory at its default location

mkdir /srv/pillar

Create your first Pillar file named for example /srv/pillar/pillar_common.sls, it’s just a YAML file containing data

branch: trunk

github: http://github.com/planetrobbie

In the above file you can also use Jinja2 tricks to set different pillar values depending on grains

{% if grains['id'].startswith('dev') %}

branch: trunk

{% elif grains['id'].startswith('qa') %}

branch: dev

{% else %}

branch: master

{% endif %}

You can now create a /srv/pillar/top.sls file to attach pillar data files to minions using the targeting capabilities of Salt.

base:
  '*':
    - pillar_common

Now tell the minions to fetch their pillar data from the master with

salt '*' saltutil.refresh_pillar

Verify all minions have the corresponding data set, for example

salt '*' pillar.get branch

Now you can access Pillar data in your state file using the following Jinja2 syntax

{{ pillar['branch'] }}

More complex data structures can be accessed like this

{{ pillar['pkgs']['apache'] }}

You can also provide a default value using the pillar.get function

{{ salt['pillar.get']('pkgs:apache', 'httpd') }}
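The colon in 'pkgs:apache' walks down nested dictionaries, and the second argument is returned when any key on the way is missing. A plain Python sketch of that lookup (my own pillar_get helper, written to mirror the behaviour described above rather than copied from Salt):

```python
def pillar_get(pillar, path, default=None, delimiter=":"):
    """Follow a colon-delimited path through nested dicts, or return default."""
    current = pillar
    for key in path.split(delimiter):
        if not isinstance(current, dict) or key not in current:
            return default
        current = current[key]
    return current


if __name__ == "__main__":
    pillar = {"branch": "trunk", "pkgs": {"apache": "apache2"}}
    print(pillar_get(pillar, "pkgs:apache", "httpd"))
    print(pillar_get({}, "pkgs:apache", "httpd"))
```

This is why the pillar.get form is safer in templates than pillar['pkgs']['apache'], which fails when the key is absent.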

To investigate further the pillar concept, consult this walkthrough

Salt ssh

When you bootstrap a node, you have to deploy the minion software before you can bring it under Salt management. Salt SSH can come to the rescue and act on a new system even before any minion is up and running. salt-ssh is built to command a server over an SSH channel instead of a ZeroMQ one. It starts by packaging a thin Salt agent, which is copied and cached on the target system, where it is unpacked and executed to offer the same kind of functionality as Salt itself.

Install the required package on your Salt master

apt-get install salt-ssh

Edit a configuration file to tell salt-ssh which servers it can control

vi /etc/salt/roster

This configuration file is required because, in the normal message bus scenario, minions connect to the master, so the master doesn’t need to store any network or host information about them. The game changes when dealing with SSH-based connections.

Add YAML content to define the targets

'*m04':
  host: 172.16.52.104
  user: sbraun

m04 target globbing pattern

host hostname or IP address of the controlled server

user used to connect over SSH

other options available

port instead of the standard port 22

sudo if you don’t want to use root as the user, set it to True; this requires the selected user to be able to run sudo without a password. Create /etc/sudoers.d/username with sbraun ALL=(ALL) NOPASSWD: ALL

priv private key path

password required only if you do not use the --priv key argument or --askpass, which is a better option than a cleartext password.

minion_opts dictionary of minion opts

Note: Make sure your targets have the master SSH public key /etc/salt/pki/master/ssh/salt-ssh.rsa.pub in their ~/.ssh/authorized_keys if you don’t want to specify passwords in the roster file.

Now you should be able to run it

salt-ssh '*' disk.usage

The first time you connect to a system, it returns a message with the key fingerprint; use -i to auto accept it next time. You then provide the user password once to inject your Salt public key (/etc/salt/pki/master/ssh/salt-ssh.rsa.pub) into the user’s authorized_keys, after which the password is no longer necessary.

Something fun you can try is the scan roster, which identifies hosts that are responding to SSH. Use it wisely, only for network discovery, and never share sensitive data over this channel.

salt-ssh --roster=scan 172.16.52.0/24 test.ping

If you come over from Ansible, there is also a roster that offers a compatibility layer

salt-ssh --roster ansible --roster-file /etc/salt/hosts '*' test.ping

If you have fail2ban installed and because of many failed attempts you get banned, unban yourself by just deleting the corresponding iptables rule

iptables -D fail2ban-SSH -s 172.16.52.100 -j REJECT

minion state file

Now comes the serious business with the following /srv/salt/minion.sls state file

salt-minion:
  pkgrepo:
    - managed
    - humanname: SaltStack Repo
    - name: deb http://repo.saltstack.com/apt/ubuntu/16.04/amd64/latest {{ grains['lsb_distrib_codename'] }} main
    - dist: {{ grains['lsb_distrib_codename'] }}
    - key_url: https://repo.saltstack.com/apt/ubuntu/14.04/amd64/latest/SALTSTACK-GPG-KEY.pub
  pkg:
    - latest
  service.running:
    - watch:
      - file: /etc/salt/minion
    - enable: True

configure hostname:
  file.managed:
    - name: /etc/hostname
    - source: salt://hostname
    - template: jinja

update current hostname:
  cmd.run:
    - name: hostname {{ pillar['hostname'] }}

configure master location:
  file.replace:
    - name: /etc/salt/minion
    - pattern: "^.?master:.*$"
    - repl: "master: {{ pillar['master'] }}"

configure node ID:
  file.replace:
    - name: /etc/salt/minion
    - pattern: "^.?id:.*$"
    - repl: "id: {{ pillar['id'] }}"

Pillar data

Now create the pillar file at /srv/pillar/minion-m04.sls

master: 172.16.52.100

id: saltstack-m04

hostname: saltstack-m04

and the /srv/pillar/top.sls to match the above pillar to the m04 minion

base:
  '*m04':
    - minion-m04
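To see what the two file.replace patterns of minion.sls do with these pillar values, you can replay them in plain Python. The sample /etc/salt/minion content is made up, and I’m assuming the pattern is applied line by line in multiline mode.

```python
import re

# Made-up excerpt of a stock /etc/salt/minion, with commented-out defaults.
minion_config = "#master: salt\n#id:\nlog_level: warning\n"
pillar = {"master": "172.16.52.100", "id": "saltstack-m04"}


def replace_setting(text, key, value):
    """Rewrite a 'key: ...' line, commented out or not, as 'key: value'."""
    pattern = r"^.?{0}:.*$".format(re.escape(key))
    replacement = "{0}: {1}".format(key, value)
    return re.sub(pattern, replacement, text, flags=re.MULTILINE)


result = replace_setting(minion_config, "master", pillar["master"])
result = replace_setting(result, "id", pillar["id"])

if __name__ == "__main__":
    print(result)
```

The .? at the start is what lets the pattern swallow a leading # so the commented default is replaced in place; it would also swallow any other single leading character, so keep that in mind on heavily customized files.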

Apply minion state

Apply the state to your minion; make sure you target only this m04 minion so as not to override the configuration of other systems

salt-ssh --verbose '*m04' state.sls minion

Accept key on master

You now only have to accept its corresponding key on your master

salt-key -A

Check everything looks good

You now have a new minion ready to be commanded over the speedy ZeroMQ message bus, check it

salt 'saltstack-m04' test.ping

saltstack-m04:

True

Hurrah !!!

minimal required template content

The above process can help you provision hundreds of minions from a template. At a minimum, this OS template needs a password or an injected public key for a user who can sudo without a password. Having Python 2.6 around also helps, so you don’t have to fall back on the raw SSH mode.

Advanced concepts

Formulas

To avoid repeating yourself, Salt brings formulas, which bundle everything required to install something on a minion. We’ll detail them in a dedicated article; in the meantime you’ll find all the official ones on this github repository.

troubleshooting

You can start your master with the debug option

service salt-master stop

salt-master -l debug

Proxy Minions

A Proxy Minion is a process written for a particular device: it takes Salt language and translates it into a language that the device understands. It then communicates with this device, a switch for example, over its API or through SSH to configure it according to the Salt State.

Salt Reactor

Salt Reactor lets you trigger actions on events seen on the event bus. Consult the documentation for all the details.
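The core idea is a mapping from event tags to reactions. Here is a minimal sketch of that dispatch, assuming tags are matched with globs; the reaction file paths are hypothetical and reactions_for is my own helper, not the reactor implementation.

```python
from fnmatch import fnmatchcase

# Event tag patterns mapped to hypothetical reaction files.
reactor_map = {
    "salt/minion/*/start": ["/srv/reactor/start.sls"],
    "salt/auth": ["/srv/reactor/auth.sls"],
}


def reactions_for(tag, mapping):
    """Return the reaction files whose tag pattern matches the event tag."""
    found = []
    for pattern, sls_files in mapping.items():
        if fnmatchcase(tag, pattern):
            found.extend(sls_files)
    return found


if __name__ == "__main__":
    print(reactions_for("salt/minion/saltstack-m04/start", reactor_map))
```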

VMware Fusion trick

I’m running the whole lab on top of VMware Fusion. When connecting a VM to a shared network, IP addresses are assigned dynamically by the Fusion DHCP server. You can configure it to assign a specific IP by editing the following file

vi "/Library/Preferences/VMware Fusion/vmnet8/dhcp.conf"

After the last line, add a section like this one, replacing the MAC address with the one from your VM

host saltstack-master {

hardware ethernet 00:0c:29:8c:c9:e4;

fixed-address 172.16.52.100;

}

You now need to restart VMware fusion.

Salt vs Ansible

When this article got posted on my company blog, I got the feedback I should add a section to compare Salt with Ansible. So here is a quick overview of the major differences.

| | Ansible | Salt |
|---|---|---|
| Company | Red Hat | SaltStack |
| Open Source | yes | yes |
| Coded in | Python | Python |
| Markup | YAML | YAML |
| architecture | masterless | agent based |
| transport mechanism | SSH | ZeroMQ message bus |
| terminology | module and playbooks | execution and state modules |
| community reviewed playbook/formulas | galaxy | github |

To be completely honest, Ansible and Salt are getting closer: for example, Ansible does have a way to use ZeroMQ, and Salt SSH is pretty similar to the way Ansible works, without requiring any agents.

Overall, Salt comes with more bells and whistles, at the expense of a steeper learning curve. Ansible is simpler, but also a bit slower due to its use of SSH as the transport mechanism, especially when making no changes; even when switching over to ZeroMQ, it still requires an initial SSH connection.

Both are great and mature solutions, so try them yourself to make your own decision.

Conclusion

Beacons combined with Reactors allow you to build an automated system that can self-heal. Your infrastructure becomes event and fact driven by leveraging the Salt Event Bus, Grains and Pillar. This is the main difference between Salt and any other player.

Minions aren’t listening on any ports, and communication between minions and master is authenticated and encrypted, which provides a good security baseline, but you still have to protect your master.

Salt’s flexibility gives you a lot of power: everything is pluggable, and you can be imperative (remote execution) or declarative (states). The persistent TCP connection (ZeroMQ) can fall back to SSH when required.

Overall, Salt is a solution that shouldn’t be limited to configuration management; it can do a lot more: remote execution, orchestration, or provisioning servers in the cloud become almost easy with Salt.

After having touched Chef and Ansible, I’m now looking deeply into Salt, so stay tuned for more articles.

Links

- official walkthrough tutorial

- Understand release numbers and codenames

- components overview

- repository installation howto

- Salt on github

- Salt formulas on github

- OpenStack-Salt on github

- OpenStack-Salt documentation

- A webui, still early days, SaltPad

- A generic(ish) base environment for Saltstack

- Troubleshooting Salt

Videos

- SaltStack youtube channel

- SaltConf 16 tech talk video playlist

- SaltConf 15 video playlist

- SaltConf 14 video playlist

Documentation

- Salt in 10 minutes

- Salt official documentation

- Salt Best Practices